Long time no see. I was planning to write this post quite a long time ago, but life (happily) took over. Time’s ripe now, and if I don’t write it soon I might not be able to ever click Done on that reminder. Gamification of tasks is indeed a powerful thing.

In an exercise of fiction, Filip Wiltgren imagined the birth of Bitcoin as being motivated by an attempt to run a secret experiment aimed at exhausting the Universe’s computational power. For reasons that will become clear in a moment, this is closely related to my morning commute.

Briefly, and slightly paraphrasing through my personal interpretation, Wiltgren’s fictional hypothesis was that the Universe itself is a huge simulation where every elementary particle being observed needs to be “rendered” in real time. Then, assuming the amount of computation sustainable by the Universe per time interval is finite, it follows that getting close to the Universe’s maximum computing capacity might cause unexpected behaviors of the physical world and therefore reveal its simulated nature. Just like filling the RAM of an HPC cluster (looking at you, Codon).

If such a limit exists though, it would probably be extremely high. What kind of algorithm could generate such a sheer amount of useless computation, whilst making everyone happy to distribute and run it at universal scale? Bitcoin’s proof-of-work block verification suits these requirements notably well, at least for a fantasy short story, and hence the existence of this piece on Nature Futures that pleasantly entertained me during my morning bus ride.

Wiltgren’s imagination hints at an intriguing perspective*. But why did someone actually start to use cryptocurrencies? I’m not especially interested in arguing about conspiracy theories, or visionaries, or Ponzi schemes. Or even about simple technological exploration.

No, my superficial take is that someone does it because they can. Mostly, because it’s fun. Obviously, I wouldn’t want to miss out on the fun.

Well, also I wanted an excuse to try out Dash.

Binance grid bot

Unlike traditional stock markets, cryptocurrency markets operate 24 hours a day, every day of the year, which makes them even more suited to automatic trading, as no individual human being could constantly follow their trends manually. These uninterrupted streams of data are also freely accessible via open-sourced exchange APIs. For my little test, I chose the Binance exchange and the python-binance wrapper for the Binance REST API.

Relying on not much more than pure and blind automation, how profitable may the game of trades be?

I wrote a bot that connects to the Binance server, authenticates with my Binance API secret key, and monitors the price of a given pair, placing buy and sell orders based on a variation of a grid strategy. In a practical use case this bot implements quite a slow strategy, where the frequency of trades can be as low as 1 or 2 per day. Thus, the bot needs to withstand reasonable exceptions and it must be able to restart from its previous state if it crashes or is killed. It also needs to fetch some exchange-specific parameters, such as the minimum tradable amount of a given token, the trade fees, and the decimal precision that is required when placing an order.
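To make the idea concrete, here is a minimal sketch of one possible grid step with a restartable state. It is not the bot's actual code: the `GridState` fields, the recentre-on-trigger rule, and the JSON state file are all assumptions made for illustration, and no exchange calls are involved.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass


@dataclass
class GridState:
    # Hypothetical state: the real bot may track more than this.
    center: float  # reference price the grid is centred on
    width: float   # fractional move from the centre that triggers an order


def decide(state: GridState, price: float) -> str:
    """Return 'BUY', 'SELL' or 'HOLD', recentring the grid after a trigger."""
    if price <= state.center * (1 - state.width):
        state.center = price
        return "BUY"
    if price >= state.center * (1 + state.width):
        state.center = price
        return "SELL"
    return "HOLD"


def save_state(state: GridState, path: str) -> None:
    """Write to a temp file, then rename, so a kill mid-write leaves the old file intact."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as fh:
        json.dump(asdict(state), fh)
    os.replace(tmp, path)


def load_state(path: str, default: GridState) -> GridState:
    """Resume from disk if a previous run left a state file behind."""
    try:
        with open(path) as fh:
            return GridState(**json.load(fh))
    except FileNotFoundError:
        return default
```

In a loop, one would call `decide` on each new price, place the corresponding order, and call `save_state` right after, so that a restart picks up the same grid centre instead of starting from scratch.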

I let the bot run undisturbed for approximately 20 days on a Raspberry Pi sitting at my place. All trades were performed on the Binance spot test network. I put together an interactive dashboard that can be used to monitor the history of actions that the bot performed, as well as the unrealised profit, and to compare the bot performance with the market trend.
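The comparison between bot and market can be sketched as two relative returns. This assumes that "unrealised profit" means the current value of both balances, priced in the quote currency, relative to the starting value; the function names and the definition are illustrative, not taken from the dashboard code.

```python
def portfolio_return(quote: float, base: float, price: float, initial_value: float) -> float:
    """Relative change in portfolio value, with the base balance priced at `price`."""
    return (quote + base * price) / initial_value - 1.0


def market_return(price: float, initial_price: float) -> float:
    """Relative change in price, i.e. what simply holding the token would have returned."""
    return price / initial_price - 1.0
```

Plotting both series over time gives the kind of side-by-side view described above: the bot is doing something useful whenever its line sits above the market's.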

20-day run

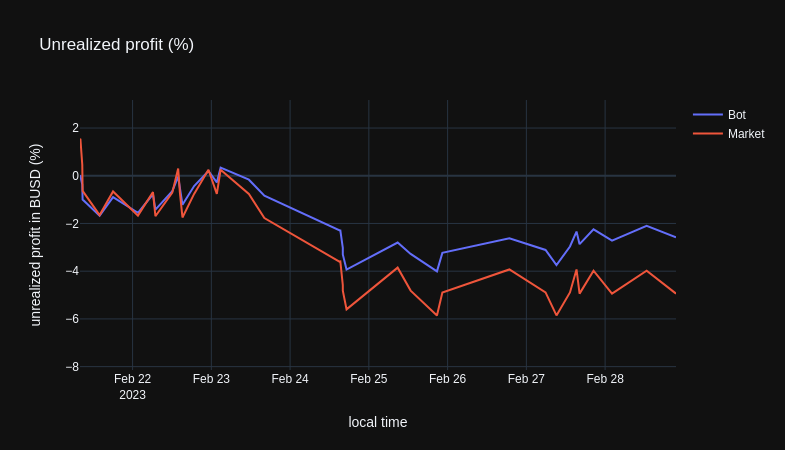

Without fail, that 20-day window was quite a sad and boring timeframe for XRP/BUSD, the market pair I picked for testing, with a price drop of around 5.0%. My bot though, even employing a very naive strategy and with non-optimised hyperparameters, closed at around -2.6%. Still sad. Nevertheless, having a look at the profit graph, with the bot performance and the market performance side by side, I noticed a few things:

- The bot behaved as expected (yeah not remarkable… but not obvious either).

- In the first two thirds of the run, the bot, even if it was not making harmful decisions and it prevented larger losses, did not take the best opportunities.

- In the last third, approximately from February 21st, the market behaviour started to appear “in sync” with the bot strategy. The oscillations in the price fit the grid parameters well, and the separation between the bot performance (blue line) and the market performance (red line) can be seen to become larger and larger with every trade (figure below).

Based on these insights, it might be useful to dynamically adjust not only the center of the grid, as the bot currently does, but also its “width”, i.e. the price change that would trigger a buy or sell order. This may be done by storing a rolling window of past prices, and counting how many times the price would have crossed the grid’s thresholds when set at different intervals from the mean price. Or, in other words, performing a “live” backtesting of the strategy to constantly assess how the bot would have performed in the near past with different settings, and to tune the current grid’s few parameters accordingly. While this is fairly easy to implement, it would probably be good to first test whether it makes sense by assessing the autocorrelation of the parameters’ performances in the time window x given the performance of those same parameters at time window x-1, in a given pair. This metric could also be a criterion to choose the most profitable pairs for the strategy.
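The crossing count could look roughly like the sketch below. It is only an illustration of the idea, not something the bot does today: the window length, the candidate widths, and the function names are assumptions, and thresholds are placed symmetrically around the window's mean price.

```python
from collections import deque
from statistics import fmean


def count_crossings(prices, level):
    """Count how often consecutive prices land on opposite sides of `level`."""
    prices = list(prices)
    return sum(
        1
        for prev, cur in zip(prices, prices[1:])
        if (prev < level) != (cur < level)
    )


def crossings_by_width(prices, widths):
    """For each candidate width, count crossings of mean*(1-w) and mean*(1+w)."""
    prices = list(prices)
    mean = fmean(prices)
    return {
        w: count_crossings(prices, mean * (1 - w))
        + count_crossings(prices, mean * (1 + w))
        for w in widths
    }


# A rolling window that silently drops the oldest price once it is full.
window = deque(maxlen=6)
window.extend([1.00, 1.04, 0.96, 1.04, 0.96, 1.00])  # made-up prices
counts = crossings_by_width(window, widths=[0.02, 0.05])
```

With these made-up prices, the narrower width is crossed repeatedly while the wider one is never reached. More crossings mean more trades, but not necessarily more profit once fees are taken into account, so the count is a starting point for tuning rather than a score to maximise blindly.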

Code

Maybe I’ll make a package. Probably not.

Meanwhile, the scripts can be found on GitHub.

p.s.

*Sorry, but now that I thought about it, I cannot unthink it. If one pushed the Universe’s capacity to compute close to its limit, wouldn’t it be easy to imagine that the computation would simply slow down, implying the whole reality would be slowed down, including the observer, who would therefore never be able to perceive any abnormality? That’s the problem of being part of the things that we try to measure. Did you consider it, Filip?